|

|

@@ -1,386 +1,12 @@

|

|

|

-== Getting Up ==

|

|

|

+= What is this? =

|

|

|

|

|

|

-=== First Things First ===

|

|

|

+This is a small repository of suggestions and guidelines intended to help you start experimenting with simple ML models.

|

|

|

|

|

|

-You want to at least get the terminology right. Use a reference such as https://www.manning.com/books/deep-learning-with-python-third-edition[_"Deep Learning with Python"_ by François Chollet], specifically chapter 1, _"What is Deep Learning?"_

|

|

|

+These are the docs:

|

|

|

|

|

|

-You want to understand how the technology works. From the same book, chapter 2, _"The Mathematical Building Blocks of Neural Networks"_ does a great job at explaining it without too much academic distraction.

|

|

|

-

|

|

|

-=== Get a Look at the Landscape ===

|

|

|

-

|

|

|

-There are so many different layers of projects, tools, and libraries.

|

|

|

-

|

|

|

-Just looking at Python's most popular ML frameworks:

|

|

|

-

|

|

|

-* https://scikit-learn.org/stable/index.html[SciKit Learn]

|

|

|

-* https://pytorch.org/[PyTorch]

|

|

|

-* https://www.tensorflow.org/[TensorFlow]

|

|

|

-

|

|

|

-Additionally, https://keras.io/[Keras] is a multi-framework ML frontend that can work with TensorFlow, PyTorch, SciKit-Learn, JAX, and others.

|

|

|

-

|

|

|

-The challenge is this is the middle layer nowadays, not even the topmost any more.

|

|

|

-

|

|

|

-These frameworks all come with their own tools to make the jobs of working with data and training models easier:

|

|

|

-

|

|

|

-* SciKit has a https://scikit-learn.org/stable/related_projects.html[related projects page]

|

|

|

-* PyTorch has an https://landscape.pytorch.org/[entire ecosystem of supporting tools]

|

|

|

-* TensorFlow has https://www.tensorflow.org/resources/libraries-extensions[the same thing]

|

|

|

-

|

|

|

-The lower layers are libraries you will use to work with data, such as:

|

|

|

-

|

|

|

-* https://numpy.org/[NumPy]

|

|

|

-* https://pandas.pydata.org/[Pandas] (based on NumPy)

|

|

|

-* https://matplotlib.org/[Matplotlib] (for drawing simple graphs)

|

|

|

-

|

|

|

-Core Python data types and libraries are an even lower layer. A course such as https://www.redhat.com/en/services/training/ad141-red-hat-training-presents-introduction-to-python-programming[AD141] might help you speed up through this lowest layer.

|

|

|

-

|

|

|

-There will generally be some intermediate-level tools like:

|

|

|

-

|

|

|

-* https://scipy.org/[SciPy], based on NumPy, for more efficient data processing, adding some high-level functions

|

|

|

-* https://seaborn.pydata.org/[Seaborn], based on Matplotlib, for richer visualisation

|

|

|

-* https://plotly.com/python/[Plotly], another high-level visualisation library

|

|

|

-

|

|

|

-The higher layers involve job scheduling frameworks like:

|

|

|

-

|

|

|

-* https://www.ray.io/[Ray], infrastructure orchestration for distributed workloads

|

|

|

-* https://codeflare.dev/[CodeFlare], distributed serverless machine learning

|

|

|

-

|

|

|

-Additionally, building on top of pre-trained models:

|

|

|

-

|

|

|

-* https://huggingface.co/docs/transformers/index[Transformers], an LLM-oriented library of pre-trained models built on top of lower-level packages (TF, PyTorch)

|

|

|

-

|

|

|

-Frameworks for creating applications:

|

|

|

-

|

|

|

-* https://www.langchain.com/[LangChain], a framework for creating LLM-based applications

|

|

|

-* https://github.com/i-am-bee/beeai-framework[Bee Agent Framework], a framework to build applications based on AI agents (Agentic AI) that can make decisions and perform tasks autonomously

|

|

|

-

|

|

|

-There will then be special-purpose libraries, such as:

|

|

|

-

|

|

|

-* https://github.com/dmlc/xgboost[XGBoost], a multi-language regularising gradient-boosting library

|

|

|

-* https://lightgbm.readthedocs.io/en/stable/[LightGBM], another gradient boosting framework

|

|

|

-

|

|

|

-MLOps is another can of worms:

|

|

|

-

|

|

|

-* https://www.kubeflow.org/[KubeFlow] for Kubernetes-related integrations (included in RHOAI)

|

|

|

-* https://github.com/elyra-ai/elyra[Elyra], integrating with JupyterLab Notebooks

|

|

|

-

|

|

|

-Don't worry - start small and when you outgrow the sandbox, look at your project and listen to what sounds like the most interesting direction to go in at the moment, and then look around to see what tools you can learn about to expand.

|

|

|

-

|

|

|

-We all needed years to get our sense of direction in this story. There are no shortcuts, just fun along the way.

|

|

|

-

|

|

|

-=== Get Some Samples ===

|

|

|

-

|

|

|

-Have a look at some very simple datasets to start with.

|

|

|

-

|

|

|

-A couple of great types of models to start learning with are regression (prediction) and classification (shape recognition, text analysis).

|

|

|

-

|

|

|

-The data you need for those is typically very different. Try figuring out why and what kind of models a certain type of data supports well.

|

|

|

-

|

|

|

-Nowadays, https://www.kaggle.com/datasets[Kaggle] has some great example datasets (requires a free account).

|

|

|

-

|

|

|

-* https://www.nist.gov/el/ammt-temps/datasets[NIST] has two excellent image classification datasets: handwritten numbers and fashion items - they are a great start for classification - almost every framework includes them. Both are also available at Kaggle - https://www.kaggle.com/datasets/hojjatk/mnist-dataset[numbers] and https://www.kaggle.com/datasets/zalando-research/fashionmnist[fashion].

|

|

|

-* https://casas.wsu.edu/datasets/[CASAS] has a _Human Activity Recognition from Continuous Ambient Sensor Data_ dataset - it is a very flexible regression dataset offering tons of opportunities (available for https://www.kaggle.com/datasets/utkarshx27/ambient-sensor-based-human-activity-recognition[download from Kaggle]).

|

|

|

-

|

|

|

-== Getting Running ==

|

|

|

-

|

|

|

-Create a system-independent Python installation. Trust me, you want to divorce it.

|

|

|

-

|

|

|

-https://conda-forge.org[Conda-Forge] is a community GitHub organisation containing repositories of https://conda.org[Conda] recipes.

|

|

|

-

|

|

|

-Conda is a _"language-agnostic, multi-platform package management ecosystem for projects"_.

|

|

|

-

|

|

|

-Conda installer is called https://conda-forge.org/download/[Miniforge].

|

|

|

-

|

|

|

-=== Installing and Preparing Conda ===

|

|

|

-

|

|

|

-Fresh installation:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-$ *mkdir /opt/miniforge*

|

|

|

-$ *bash ~/Downloads/Miniforge3-24.11.3-2-MacOSX-arm64.sh -b -f -p /opt/miniforge*

|

|

|

-----

|

|

|

-

|

|

|

-For updates:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-$ *bash ~/Downloads/Miniforge3-24.11.3-2-MacOSX-arm64.sh -b -f -u -p /opt/miniforge*

|

|

|

-----

|

|

|

-

|

|

|

-Obtain an activation script that is not embedded into your `bashrc`:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-$ *mv ~/.bash_profile{,-backup}*

|

|

|

-$ *touch ~/.bash_profile*

|

|

|

-$ *mamba init bash*

|

|

|

-no change /opt/miniforge/condabin/conda

|

|

|

-...

|

|

|

-no change /opt/miniforge/etc/profile.d/conda.csh

|

|

|

-modified /foo/bar/.bash_profile

|

|

|

-

|

|

|

-==> For changes to take effect, close and re-open your current shell. <==

|

|

|

-

|

|

|

-Added mamba to /foo/bar/.bash_profile

|

|

|

-

|

|

|

-==> For changes to take effect, close and re-open your current shell. <==

|

|

|

-

|

|

|

-$ *(echo '#!/bin/false'; cat ~/.bash_profile) > conda-init.sh*

|

|

|

-$ *mv ~/.bash_profile{-backup,}*

|

|

|

-----

|

|

|

-

|

|

|

-For activation at any time, source the script:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-$ *source conda-init.sh*

|

|

|

-(base) $

|

|

|

-----

|

|

|

-

|

|

|

-=== Creating Conda Environments ===

|

|

|

-

|

|

|

-You can create any number of environments in Conda.

|

|

|

-

|

|

|

-Let's create a couple: SciKit-Learn, PyTorch, and TensorFlow.

|

|

|

-

|

|

|

-Step one is always identifying the version of Python that the environment works best with, also in terms of all of its dependencies.

|

|

|

-

|

|

|

-Sometimes, the toolkit will suggest the steps for the package manager we chose (Conda). I propose you completely ignore this and just roll your own environment. It will be for the better once you hit some issues (and you will) - you will at least be familiar with the components you chose and the process of replacing them and/or adding more.

|

|

|

-

|

|

|

-Check https://www.python.org/downloads/[Current Python Release Status]. As of the time of this writing, 3.13 was the latest non-pre-release version.

|

|

|

-

|

|

|

-Cross-check with https://scikit-learn.org/stable/install.html[latest stable SciKit-Learn release].

|

|

|

-

|

|

|

-Create an environment description, say `env-sklearn-16.yml`:

|

|

|

-

|

|

|

-[source,yaml]

|

|

|

-----

|

|

|

----

|

|

|

-name: sklearn-16

|

|

|

-channels:

|

|

|

- - conda-forge

|

|

|

-dependencies:

|

|

|

- - python>=3.13,<3.14

|

|

|

- - numpy>=1.19.5

|

|

|

- - scipy>=1.6.0

|

|

|

- - scikit-learn>=1.6.1,<1.7.0

|

|

|

- - cython>=3.0.10

|

|

|

- - pandas>=1.1.5

|

|

|

- - matplotlib>=3.3.4

|

|

|

- - seaborn>=0.9.0

|

|

|

-...

|

|

|

-----
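
As a rough illustration of what a pin such as `python>=3.13,<3.14` means, the sketch below checks an interpreter version against a half-open range. The function name `python_in_range` is hypothetical and is not part of Conda or Mamba; the real solver considers far more than this single comparison.

```python
import sys


def python_in_range(minimum, below, version=None):
    """Return True if minimum <= version < below.

    All arguments are (major, minor) tuples; `version` defaults to the
    running interpreter. Hypothetical helper, for illustration only.
    """
    current = tuple(sys.version_info[:2]) if version is None else tuple(version)
    return tuple(minimum) <= current < tuple(below)


if __name__ == "__main__":
    # Mirrors the `python>=3.13,<3.14` pin from the environment file.
    print(python_in_range((3, 13), (3, 14)))
```

Running it inside an activated environment is a quick way to see whether the interpreter you got matches the range you asked for.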

|

|

|

-

|

|

|

-Now tell `mamba` (or `conda`) to create it:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *mamba env create -n sklearn-16 -f ./env-sklearn-16.yml*

|

|

|

-Channels:

|

|

|

- - conda-forge

|

|

|

-Platform: osx-arm64

|

|

|

-Collecting package metadata (repodata.json): done

|

|

|

-Solving environment: done

|

|

|

-

|

|

|

-Downloading and Extracting Packages:

|

|

|

-...

|

|

|

-

|

|

|

-Preparing transaction: done

|

|

|

-Verifying transaction: done

|

|

|

-Executing transaction: done

|

|

|

-...

|

|

|

-----

|

|

|

-

|

|

|

-Activate it (some checks along the way to show you how the entire thing works):

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *which python*

|

|

|

-/opt/miniforge/bin/python

|

|

|

-

|

|

|

-(base) $ *python --version*

|

|

|

-Python 3.12.9

|

|

|

-

|

|

|

-(base) $ *mamba env list*

|

|

|

-# conda environments:

|

|

|

-#

|

|

|

-base * /opt/miniforge

|

|

|

-sklearn-16 /opt/miniforge/envs/sklearn-16

|

|

|

-

|

|

|

-(base) $ *mamba activate sklearn-16*

|

|

|

-

|

|

|

-(sklearn-16) $ *which python*

|

|

|

-/opt/miniforge/envs/sklearn-16/bin/python

|

|

|

-

|

|

|

-(sklearn-16) $ *python --version*

|

|

|

-Python 3.13.2

|

|

|

-

|

|

|

-(sklearn-16) $ *python3*

|

|

|

-Python 3.13.2 | packaged by conda-forge | (main, Feb 17 2025, 14:02:48) [Clang 18.1.8 ] on darwin

|

|

|

-Type "help", "copyright", "credits" or "license" for more information.

|

|

|

-

|

|

|

->>> *import sklearn*

|

|

|

-

|

|

|

->>> *sklearn.show_versions()*

|

|

|

-

|

|

|

-System:

|

|

|

- python: 3.13.2 | packaged by conda-forge | (main, Feb 17 2025, 14:02:48) [Clang 18.1.8 ]

|

|

|

-executable: /opt/miniforge/envs/sklearn-16/bin/python3

|

|

|

- machine: macOS-15.4-arm64-arm-64bit-Mach-O

|

|

|

-

|

|

|

-Python dependencies:

|

|

|

- sklearn: 1.6.1

|

|

|

- pip: 25.0.1

|

|

|

- setuptools: 78.1.0

|

|

|

- numpy: 2.2.4

|

|

|

- scipy: 1.15.2

|

|

|

- Cython: 3.0.12

|

|

|

- pandas: 2.2.3

|

|

|

- matplotlib: 3.10.1

|

|

|

- joblib: 1.4.2

|

|

|

-threadpoolctl: 3.6.0

|

|

|

-

|

|

|

-Built with OpenMP: True

|

|

|

-

|

|

|

-threadpoolctl info:

|

|

|

- user_api: blas

|

|

|

- internal_api: openblas

|

|

|

- num_threads: 10

|

|

|

- prefix: libopenblas

|

|

|

- filepath: /opt/miniforge/envs/sklearn-16/lib/libopenblas.0.dylib

|

|

|

- version: 0.3.29

|

|

|

-threading_layer: openmp

|

|

|

- architecture: VORTEX

|

|

|

-

|

|

|

- user_api: openmp

|

|

|

- internal_api: openmp

|

|

|

- num_threads: 10

|

|

|

- prefix: libomp

|

|

|

- filepath: /opt/miniforge/envs/sklearn-16/lib/libomp.dylib

|

|

|

- version: None

|

|

|

-

|

|

|

->>> *exit()*

|

|

|

-----
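
The same checks can be made from inside Python, which helps when a script or notebook seems to be using the wrong interpreter. This is a minimal sketch: the function name `describe_interpreter` is made up for this example, and `CONDA_DEFAULT_ENV` is assumed to be set, as Conda normally does when an environment is activated.

```python
import os
import sys


def describe_interpreter():
    """Collect roughly what `which python` and `python --version` report."""
    return {
        "executable": sys.executable,
        "prefix": sys.prefix,
        "version": ".".join(str(part) for part in sys.version_info[:3]),
        # Absent (None) when no Conda environment is active.
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```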

|

|

|

-

|

|

|

-If you want to later update some of the environment components, you can do so by editing the env file and issuing the following command:

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(sklearn-16) $ *mamba env update -f ./env-sklearn-16.yml*

|

|

|

-----

|

|

|

-+

|

|

|

-====

|

|

|

-WARNING: `env update` is always applied to the _current_ environment.

|

|

|

-====

|

|

|

-

|

|

|

-You can do the same with other environments: PyTorch, TensorFlow, etc. These may even come with hardware acceleration support for your computer system.

|

|

|

-

|

|

|

-[source,yaml]

|

|

|

-----

|

|

|

----

|

|

|

-name: pytorch-26

|

|

|

-channels:

|

|

|

- - conda-forge

|

|

|

-dependencies:

|

|

|

- - python>=3.12,<3.13

|

|

|

- - numpy>=1.19.5

|

|

|

- - pandas>=1.1.5

|

|

|

- - matplotlib>=3.3.4

|

|

|

- - pytorch>=2.6,<2.7

|

|

|

-...

|

|

|

-----

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *mamba env create -n pytorch-26 -f ./env-pytorch-26.yml*

|

|

|

-----

|

|

|

-

|

|

|

-[source,yaml]

|

|

|

-----

|

|

|

----

|

|

|

-name: tensorflow-2.16

|

|

|

-channels:

|

|

|

- - apple

|

|

|

- - conda-forge

|

|

|

-dependencies:

|

|

|

- - python>=3.9

|

|

|

- - numpy>=1.19.5

|

|

|

- - pandas>=1.1.5

|

|

|

- - matplotlib>=3.3.4

|

|

|

- - tensorflow-deps

|

|

|

- - pip>=25.0

|

|

|

- - pip:

|

|

|

- - tensorflow-macos

|

|

|

- - tensorflow-metal

|

|

|

-...

|

|

|

-----

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *mamba env create -n tf-216 -f ./env-tf-216.yml*

|

|

|

-----

|

|

|

-

|

|

|

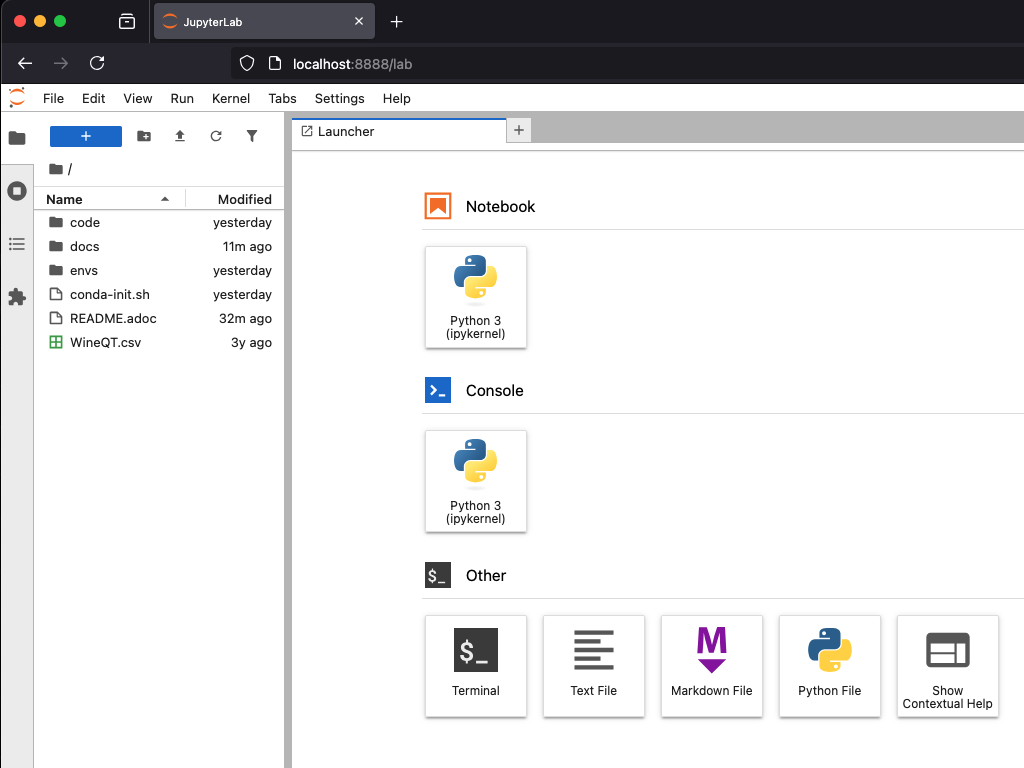

-=== What is JupyterLab? ===

|

|

|

-

|

|

|

-Try a workflow by writing a script. It's going to be a lot of re-running of the same code when testing it.

|

|

|

-

|

|

|

-There is an example script for two model types using SciKit-Learn called `wine-sklearn.py`. The second model is deliberately commented out because there is an issue with it.

|

|

|

-

|

|

|

-If you try figuring out what its problem is, you need to re-run the entire script every time you make a change, which is very awkward and time-consuming.

|

|

|

-

|

|

|

-Try executing the same workflow in an interactive interpreter by copying the script to a Python shell line by line. It's extremely inconvenient.

|

|

|

-

|

|

|

-Sometimes you want to return a couple of steps to change something about your data, and then re-run the training of a model. It is not very transparent what the state of your data is at the moment and what the correct order of steps should be.

|

|

|

-

|

|

|

-JupyterLab Notebooks were designed to resolve those problems by being something in between. You can run them as a script, but you can also run individual blocks of a notebook called _cells_ in isolation.

|

|

|

-

|

|

|

-Not only that - you can define different Python kernels which belong to various Conda environments, in the same JupyterLab instance, and simply associate your notebooks with the kernel they need, so that they can run in whichever environment you want them to.
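
To make cells and kernel association a little more concrete: a notebook file is JSON, and both the list of cells and the name of the associated kernel are stored in it. The sketch below reads them using only the standard library. The function name `summarise_notebook` is hypothetical, and the code assumes the common version 4 notebook layout with top-level `cells` and `metadata.kernelspec` entries.

```python
import json


def summarise_notebook(path):
    """Return the kernel name and the cell types stored in an .ipynb file."""
    with open(path, encoding="utf-8") as handle:
        notebook = json.load(handle)
    # The kernel association lives in the notebook metadata; it can be
    # missing in a notebook that was never saved with a kernel.
    kernel = notebook.get("metadata", {}).get("kernelspec", {}).get("name")
    cell_types = [cell.get("cell_type") for cell in notebook.get("cells", [])]
    return kernel, cell_types
```

Calling it on any notebook you have saved shows which kernel the notebook asks for and how many code and markdown cells it contains.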

|

|

|

-

|

|

|

-If you want to use them, the best way to do it is to install `jupyterlab` into the base environment.

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(_whatever_) $ *mamba activate base*

|

|

|

-

|

|

|

-(base) $ *pip install jupyterlab*

|

|

|

-Collecting jupyterlab

|

|

|

-...

|

|

|

-Successfully installed MarkupSafe-3.0.2 anyio-4.9.0 appnope-0.1.4 argon2-cffi-23.1.0 argon2-cffi-bindings-21.2.0 arrow-1.3.0 asttokens-3.0.0 async-lru-2.0.5 attrs-25.3.0 babel-2.17.0 beautifulsoup4-4.13.3 bleach-6.2.0 comm-0.2.2 debugpy-1.8.13 decorator-5.2.1 defusedxml-0.7.1 executing-2.2.0 fastjsonschema-2.21.1 fqdn-1.5.1 h11-0.14.0 httpcore-1.0.7 httpx-0.28.1 ipykernel-6.29.5 ipython-9.1.0 ipython-pygments-lexers-1.1.1 isoduration-20.11.0 jedi-0.19.2 jinja2-3.1.6 json5-0.12.0 jsonschema-4.23.0 jsonschema-specifications-2024.10.1 jupyter-client-8.6.3 jupyter-core-5.7.2 jupyter-events-0.12.0 jupyter-lsp-2.2.5 jupyter-server-2.15.0 jupyter-server-terminals-0.5.3 jupyterlab-4.4.0 jupyterlab-pygments-0.3.0 jupyterlab-server-2.27.3 matplotlib-inline-0.1.7 mistune-3.1.3 nbclient-0.10.2 nbconvert-7.16.6 nbformat-5.10.4 nest-asyncio-1.6.0 notebook-shim-0.2.4 overrides-7.7.0 pandocfilters-1.5.1 parso-0.8.4 pexpect-4.9.0 prometheus-client-0.21.1 prompt_toolkit-3.0.50 psutil-7.0.0 ptyprocess-0.7.0 pure-eval-0.2.3 pygments-2.19.1 python-dateutil-2.9.0.post0 python-json-logger-3.3.0 pyyaml-6.0.2 pyzmq-26.4.0 referencing-0.36.2 rfc3339-validator-0.1.4 rfc3986-validator-0.1.1 rpds-py-0.24.0 send2trash-1.8.3 six-1.17.0 sniffio-1.3.1 soupsieve-2.6 stack_data-0.6.3 terminado-0.18.1 tinycss2-1.4.0 tornado-6.4.2 traitlets-5.14.3 types-python-dateutil-2.9.0.20241206 typing_extensions-4.13.1 uri-template-1.3.0 wcwidth-0.2.13 webcolors-24.11.1 webencodings-0.5.1 websocket-client-1.8.0

|

|

|

-----

|

|

|

-

|

|

|

-Starting Jupyter will automatically open it in your browser.

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *jupyter lab*

|

|

|

-[I 2025-04-07 14:54:37.059 ServerApp] jupyter_lsp | extension was successfully linked.

|

|

|

-...

|

|

|

-[I 2025-04-07 14:54:39.694 LabApp] Build is up to date

|

|

|

-----

|

|

|

-

|

|

|

-If you want to reopen it at any later point, you can point your browser to `\http://localhost:8888/lab` and it will reload the last state of the workbench before you closed it.

|

|

|

-

|

|

|

-=== Adding Conda Environments to JupyterLab ===

|

|

|

-

|

|

|

-Introduce Jupyter Kernels into the specific environments - while JupyterLab is running, install `ipykernel` into your environment and tell the module to register itself.

|

|

|

-

|

|

|

-[subs="+quotes"]

|

|

|

-----

|

|

|

-(base) $ *mamba activate sklearn-16*

|

|

|

-

|

|

|

-(sklearn-16) $ *pip install ipykernel*

|

|

|

-Collecting ipykernel

|

|

|

-...

|

|

|

-Successfully installed appnope-0.1.4 asttokens-3.0.0 comm-0.2.2 debugpy-1.8.13 decorator-5.2.1 executing-2.2.0 ipykernel-6.29.5 ipython-9.1.0 ipython-pygments-lexers-1.1.1 jedi-0.19.2 jupyter-client-8.6.3 jupyter-core-5.7.2 matplotlib-inline-0.1.7 nest-asyncio-1.6.0 parso-0.8.4 pexpect-4.9.0 platformdirs-4.3.7 prompt_toolkit-3.0.50 psutil-7.0.0 ptyprocess-0.7.0 pure-eval-0.2.3 pygments-2.19.1 pyzmq-26.4.0 stack_data-0.6.3 traitlets-5.14.3 wcwidth-0.2.13

|

|

|

-

|

|

|

-(sklearn-16) $ *python -mipykernel install --user --name=sklearn-16*

|

|

|

-Installed kernelspec sklearn-16 in /foo/bar/baz/Jupyter/kernels/sklearn-16

|

|

|

-----

|

|

|

-

|

|

|

-Do the same thing for the other two environments.

|

|

|

-

|

|

|

-Once you open a notebook, you can select the kernel you need to run it with in the top-right corner menu.
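
If a kernel does not show up in that menu, it can help to look at what was actually registered. Each registered kernel is a directory containing a `kernel.json` file whose `argv` entry is the command Jupyter runs to start it. The sketch below lists those entries; `list_kernelspecs` is a hypothetical helper, and the directory to pass in is an assumption: use the parent of the path printed by the `ipykernel install` command on your system.

```python
import json
from pathlib import Path


def list_kernelspecs(kernels_dir):
    """Map each kernel name to the interpreter its kernel.json launches."""
    specs = {}
    for spec_file in sorted(Path(kernels_dir).glob("*/kernel.json")):
        spec = json.loads(spec_file.read_text(encoding="utf-8"))
        # argv[0] is normally the Python executable of the environment.
        argv = spec.get("argv", [])
        specs[spec_file.parent.name] = argv[0] if argv else None
    return specs
```

A kernel whose first `argv` entry points into the wrong environment directory is a common reason for imports failing inside a notebook while they work in the terminal.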

|

|

|

+* link:docs/GETTING_UP.adoc[Getting Up]: how to figure out what the hell this is all about

|

|

|

+* link:docs/GETTING_RUNNING.adoc[Getting Running]: how to set up your Conda environments so you can start playing

|

|

|

+* link:docs/JUPYTERLAB.adoc[JupyterLab]: modify your base Conda env to run JupyterLab and easily execute notebooks in other envs

|

|

|

|

|

|

== Magic Time ==

|

|

|

|

|

|

@@ -395,7 +21,8 @@ It has features using 11-dimension tensors describing a wine's chemical composit

|

|

|

The following files are available in this project:

|

|

|

|

|

|

`wine-sklearn.py`::

|

|

|

- A SciKit-Learn script that loads data, splits it into training and testing subsets, normalizes the features and trains a _C-Support Vector Classification_ model called `SVC` in SKLearn. It then proceeds to visualise the efficiency of the model using a _confusion matrix_ and a heatmap. The idea is that the commented part, training of a modified SVC called NuSVC, which has an issue, would demonstrate how awkward is testing and fixing the script.

|

|

|

-

|

|

|

+ A SciKit-Learn script that loads data, splits it into training and testing subsets, normalizes the features and trains a _C-Support Vector Classification_ model called `SVC` in SKLearn. It then proceeds to visualise the efficiency of the model using a _confusion matrix_ and a heatmap. The idea is that the commented part, training of a modified SVC called NuSVC, which has an issue, would demonstrate how awkward it is to test and fix the script by constantly re-running it.

|

|

|

|

|

|

+`wine-sklearn.ipynb`::

|

|

|

+ The same as the above script, only using a JupyterLab notebook. Because you can be selective about which cells to run, nothing is commented out. You are free to re-run sections of the notebook as often as you want, provided the cells they depend on have already been run.
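
For readers who have not met a confusion matrix before, the idea behind the one mentioned for `wine-sklearn.py` can be sketched in plain Python: count how often each true label was predicted as each possible label. This is not the SKLearn implementation the script uses; `count_confusions` is a hypothetical stand-in, and the labels below are made up rather than taken from the wine data.

```python
from collections import Counter


def count_confusions(actual, predicted, labels):
    """Rows are true labels, columns are predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(truth, guess)] for guess in labels] for truth in labels]


if __name__ == "__main__":
    # Made-up labels, not results from the wine dataset.
    actual = ["good", "good", "bad", "bad", "bad"]
    predicted = ["good", "bad", "bad", "bad", "good"]
    for row in count_confusions(actual, predicted, ["good", "bad"]):
        print(row)
```

The diagonal holds the correct predictions, so a model that works well concentrates its counts there; a heatmap simply makes that pattern easier to see.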

|

|

|

|